📰 August 10th, 2023. Issue #17

This issue explains how testing machine learning models differs from conventional software testing, and why evaluating model performance alone is not enough. It covers how to test ML models, along with key principles and recommended practices.

Testing Difficulties for ML Models

Software developers write code to achieve deterministic behavior. Testing pinpoints the sections of code that fail and offers a reasonably consistent measure of coverage, such as the lines of code exercised. It serves two key purposes:

Quality assurance: It determines if the software aligns with the established requirements.

Detection of defects and flaws: Both during the development phase and while the software is in production.

In contrast, data scientists and ML engineers train models through example inputs and parameter tuning. The behavior of these models is not written explicitly; it emerges from the training logic and the data. Testing ML models presents the following challenges:

Lack of transparency. Many models work like black boxes.

Indeterminate modeling outcomes. Many models rely on stochastic algorithms and do not produce the same model after (re)training.

Generalizability. Models need to work consistently in circumstances other than their training environment.

Unclear idea of coverage. There is no standard way to define test coverage for machine learning models. Coverage here does not map to lines of code as it does in software development. Instead, it could refer to concepts such as input data diversity and the distribution of model outputs.

Resource needs. Continuous testing of ML models is resource- and time-intensive.

These challenges make it hard to understand why a model performs poorly and to interpret its results. They also make it uncertain whether the model will keep working when the input data distribution changes (data drift) or when the relationship between input and output variables shifts (concept drift).
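As a rough illustration of a data drift check, the sketch below compares one feature's training values with values seen later, using the two-sample Kolmogorov-Smirnov statistic (the largest gap between the two empirical distributions). The choice of statistic, the function name `ks_statistic`, the sample values, and the 0.3 threshold are all assumptions made for this example, not something prescribed above.

```python
from bisect import bisect_right
from typing import Sequence


def ks_statistic(reference: Sequence[float], current: Sequence[float]) -> float:
    """Largest gap between the empirical CDFs of two samples (0 = identical)."""
    ref_sorted, cur_sorted = sorted(reference), sorted(current)
    max_gap = 0.0
    # The gap can only change at observed values, so checking those is enough.
    for value in sorted(set(ref_sorted) | set(cur_sorted)):
        cdf_ref = bisect_right(ref_sorted, value) / len(ref_sorted)
        cdf_cur = bisect_right(cur_sorted, value) / len(cur_sorted)
        max_gap = max(max_gap, abs(cdf_ref - cdf_cur))
    return max_gap


# Hypothetical feature values: training data vs. data seen in production.
train_values = [float(v) for v in range(1, 11)]   # 1..10
live_values = [float(v) for v in range(6, 16)]    # 6..15, shifted upward

DRIFT_THRESHOLD = 0.3  # assumed threshold; tune it for your own data

drift_score = ks_statistic(train_values, live_values)
drift_detected = drift_score > DRIFT_THRESHOLD
print(f"KS statistic: {drift_score:.2f}, drift detected: {drift_detected}")
```

A check like this only covers data drift on a single feature. Concept drift needs labelled outcomes, since it concerns the relationship between inputs and outputs.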

Evaluation vs Testing

Many practitioners rely exclusively on performance evaluation metrics for their machine learning models. However, evaluation and testing are not the same thing.

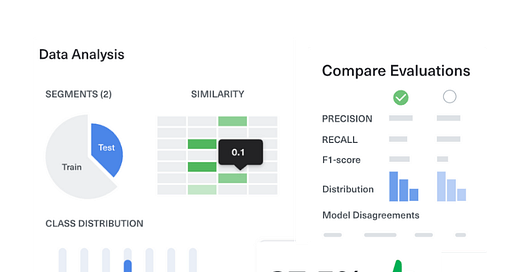

Evaluating an ML model focuses on its overall performance. It typically involves performance metrics, curves, and sometimes examples of incorrect predictions. This kind of evaluation is very useful for tracking progress across model iterations. However, it says little about the underlying causes of failures or about specific model behaviors.

In contrast, machine learning testing goes beyond assessing model performance on data subsets. It checks that all components of the ML system work together to deliver the intended quality of results. This helps teams find defects in the code, data, and model, and fix them in a targeted way.

How-to: Testing Machine Learning Models

Many current testing practices for ML models rely on manual error analysis, such as categorizing failure modes. These practices tend to be slow, costly, and error-prone. An effective testing framework for ML models should formalize and streamline them.

Software development test categories can be mapped onto machine learning models by adapting their principles to model behavior:

Unit test: Validates the correctness of individual model components.

Regression test: Checks that the model has not broken and that previously identified bugs have not reappeared.

Integration test: Verifies the compatibility of various components within the machine learning pipeline.

Testing tasks can fall under different categories (model evaluation, monitoring, validation) depending on your specific problem, context, and organizational structure. This newsletter concentrates on tests specific to the machine learning modeling problem (post-training tests) and does not cover other test types. Make sure your model tests are integrated into your wider management framework.

Testing Trained Models

With code, you can write test cases by hand. This approach does not work well for machine learning models, because it is hard to cover every edge case in a high-dimensional input space.

Instead, test model behavior with methods such as monitoring, data slicing, or property-based testing, focused on the real-world problems the model has to handle.

Invariance Tests

An invariance test defines changes to the input that should not affect the model's output.

A common way to test invariance is data augmentation: pair each unaltered input sample with a modified copy and measure how much the model's output changes.

Consider checking whether a person's name influences their predicted height. The initial assumption is that the two are unrelated. If a test contradicts this assumption, it could point to a hidden demographic correlation between name and height (because the dataset covers several countries with different name and height distributions).
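The name example can be sketched as a small invariance check. Everything here is hypothetical: `predict_height` is a toy stand-in for a trained model, and the record fields, the `swap_name` perturbation, and the tolerance are assumptions chosen for illustration.

```python
from typing import Any, Callable, Dict, List

Record = Dict[str, Any]


def predict_height(record: Record) -> float:
    """Toy stand-in for a trained model: height in cm from sex and age."""
    base = 175.0 if record["sex"] == "M" else 162.0
    return base - 0.05 * max(0, record["age"] - 40)


def swap_name(record: Record) -> Record:
    """Perturbation: replace the name and leave every other field untouched."""
    return {**record, "name": "Alex"}


def invariance_violations(
    model: Callable[[Record], float],
    records: List[Record],
    perturb: Callable[[Record], Record],
    tolerance: float = 1e-6,
) -> List[Record]:
    """Return the records whose prediction changes after the perturbation."""
    violations = []
    for record in records:
        original = model(record)
        perturbed = model(perturb(record))
        if abs(original - perturbed) > tolerance:
            violations.append(record)
    return violations


records = [
    {"name": "Maria", "sex": "F", "age": 34},
    {"name": "Kenji", "sex": "M", "age": 58},
]

violations = invariance_violations(predict_height, records, swap_name)
print(f"{len(violations)} invariance violation(s)")
```

With a real model, a non-empty `violations` list is the signal to look for a hidden correlation between the perturbed field and the target.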

Directional Expectation Tests

Directional expectation tests define how a given change to the input is expected to affect the output.

For example, when predicting house prices you can test assumptions about the number of bathrooms or the size of the property. A reasonable expectation is that more bathrooms should lead to a higher predicted price. Any deviation from this could reveal a wrong assumption about the relationship between input and output, or a bias in the dataset's distribution (such as an overrepresentation of small studio apartments in affluent neighborhoods).
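A minimal sketch of the bathroom check, assuming a toy linear `predict_price` function in place of a trained model. The feature names, coefficients, and sample houses are invented for the example.

```python
from typing import Callable, Dict, List

House = Dict[str, float]


def predict_price(house: House) -> float:
    """Toy stand-in for a trained price model (made-up coefficients)."""
    return 50_000 + 1_200 * house["area_m2"] + 15_000 * house["bathrooms"]


def directional_failures(
    model: Callable[[House], float],
    houses: List[House],
    feature: str,
    delta: float,
) -> List[House]:
    """Return houses where increasing `feature` by `delta` lowers the prediction."""
    failures = []
    for house in houses:
        modified = {**house, feature: house[feature] + delta}
        if model(modified) < model(house):
            failures.append(house)
    return failures


houses = [
    {"area_m2": 45.0, "bathrooms": 1.0},
    {"area_m2": 120.0, "bathrooms": 2.0},
]

failures = directional_failures(predict_price, houses, "bathrooms", 1.0)
print(f"{len(failures)} directional expectation failure(s)")
```

The check changes one feature at a time and holds the rest fixed, which keeps a failure easy to trace back to a single input.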

Minimum Functionality Tests

A minimum functionality test checks whether individual model components behave as expected. The reasoning is that overall, output-based performance can hide serious problems developing inside the model.

Here are approaches to examine individual components:

Create samples that should be very easy for the model to predict, and check that it predicts them correctly every time.

Assess data segments and subsets that fulfill certain criteria (e.g., evaluating a language model solely on short sentences to gauge its proficiency in predicting such sentences).

Conduct tests targeting failure modes that were identified during manual error analysis.
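The data-slice approach can be sketched as follows. The keyword-based `classify_sentiment` function is a hypothetical stand-in for a trained language model, and the examples and the three-word cutoff for "short" sentences are assumptions made for illustration.

```python
from typing import Callable, List, Tuple

Example = Tuple[str, str]  # (text, expected label)


def classify_sentiment(text: str) -> str:
    """Toy stand-in for a trained classifier: looks for positive keywords."""
    positive_words = {"good", "great", "love"}
    return "pos" if set(text.lower().split()) & positive_words else "neg"


def slice_accuracy(
    model: Callable[[str], str],
    examples: List[Example],
    in_slice: Callable[[str], bool],
) -> float:
    """Accuracy of `model` on the examples whose text satisfies `in_slice`."""
    selected = [(text, label) for text, label in examples if in_slice(text)]
    if not selected:
        raise ValueError("slice is empty")
    correct = sum(model(text) == label for text, label in selected)
    return correct / len(selected)


examples = [
    ("great movie", "pos"),
    ("I love it", "pos"),
    ("bad plot", "neg"),
    ("The film was not good and dragged on for far too long", "neg"),
]


def is_short(text: str) -> bool:
    """Slice criterion (assumed): at most three words."""
    return len(text.split()) <= 3


short_accuracy = slice_accuracy(classify_sentiment, examples, is_short)
overall_accuracy = slice_accuracy(classify_sentiment, examples, lambda _: True)
print(f"short sentences: {short_accuracy:.2f}, overall: {overall_accuracy:.2f}")
```

Here the toy model is perfect on short sentences and fails on the long negated one. A single overall score would blur that difference.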

Conclusion

Testing an entire model takes considerable time, especially when running integration tests.

To save resources and speed up testing, focus on small components of the model (for instance, verifying that a single gradient descent iteration reduces the loss) or use a reduced dataset. Alternatively, use simpler models to quickly spot shifts in feature relevance and detect concept drift and data drift early.
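The single-iteration check might look like the sketch below. It assumes a one-feature linear model trained with mean squared error, written in plain Python. The data, starting parameters, and learning rate are made up for the example, and a real test would call your own training step instead.

```python
from typing import List, Tuple


def mse_loss(w: float, b: float, xs: List[float], ys: List[float]) -> float:
    """Mean squared error of the line y = w * x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradient_step(
    w: float, b: float, xs: List[float], ys: List[float], lr: float
) -> Tuple[float, float]:
    """One gradient descent update on the MSE loss."""
    n = len(xs)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return w - lr * grad_w, b - lr * grad_b


# Tiny synthetic dataset generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

loss_before = mse_loss(0.0, 0.0, xs, ys)
w1, b1 = gradient_step(0.0, 0.0, xs, ys, lr=0.05)
loss_after = mse_loss(w1, b1, xs, ys)

# The test itself: one step with a small learning rate should reduce the loss.
assert loss_after < loss_before, "a single gradient step did not reduce the loss"
```

A test like this runs in milliseconds, so it fits in the fast part of a test cycle.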

For integration tests, run a fixed set of basic tests on every iteration and leave the longer, more expensive tests to run in the background. This gives continuous verification while still leaving room for more comprehensive checks.