AVID (AI Vulnerability Database) collects, presents, and persists information about weaknesses and flaws in AI systems — “vulnerabilities.” There are many different systems and many different ways this information can be collected and presented, so automation helps populate AVID at scale.

Apollo has announced a partnership with AVID to support open collaboration on this effort, and our open-source project ModsysML has started building integrations with existing AVID resources. As our first reporting integration, we've linked ModsysML, the open-source toolkit for improving AI models developed by the Apollo team, to AVID. We are building a workflow that quickly converts the vulnerabilities ModsysML finds into informative, evidence-based reports on model accuracy.

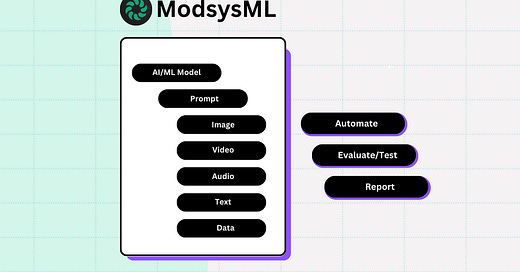
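To make the "results to evidence-based report" idea more concrete, here is a minimal sketch. It is not AVID's report schema and not how ModsysML's `create_report` works internally. The JSON layout, the `build_report` and `write_report` helpers, and the assumption that each result row carries an `item` and a boolean `passed` are all hypothetical. The argument names loosely mirror the placeholders passed to `create_report` in the ModsysML example later in this post.

```python
import json
from datetime import date
from typing import Dict, List, Optional


def build_report(provider: str, model: str, dataset_name: str,
                 dataset_url: str, summary: str,
                 rows: List[Dict[str, object]]) -> Dict[str, object]:
    """Turn per-test-case results into a hypothetical report dictionary.

    Each row is assumed to carry at least an "item" and a boolean "passed".
    """
    failed = [row for row in rows if not row["passed"]]
    # Share of cases that passed; None when there are no cases at all.
    pass_rate: Optional[float] = (
        (len(rows) - len(failed)) / len(rows) if rows else None
    )
    return {
        "provider": provider,
        "model": model,
        "dataset": {"name": dataset_name, "url": dataset_url},
        "summary": summary,
        "reported_date": date.today().isoformat(),
        "metrics": {"cases": len(rows), "failed": len(failed),
                    "pass_rate": pass_rate},
        # Failing cases are kept verbatim as the report's evidence.
        "evidence": failed,
    }


def write_report(report: Dict[str, object], path: str) -> None:
    """Persist the report as pretty-printed JSON."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(report, fh, indent=2)


# Usage with placeholder values (hypothetical rows, not real results).
rows = [
    {"item": "example A", "passed": True},
    {"item": "example B", "passed": False},
]
report = build_report("provider_name", "ai-model", "dataset_name",
                      "link_to_data_set", "summary", rows)
print(report["metrics"])  # -> {'cases': 2, 'failed': 1, 'pass_rate': 0.5}
```

Keeping the failing cases themselves in the report, rather than only an aggregate score, is what makes it evidence-based: a reader can inspect exactly which inputs tripped the model.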

What is ModsysML?

ModsysML is an open-source toolbox for automation, building insights, and evaluating model responses across relevant test cases. Prompt tuning is a way to improve AI models using a small-to-medium amount of contextual data: instead of training a foundation model from scratch, it lets users take a pre-trained model and adapt it to a specific use case. ModsysML goes further by automating evaluations, and even suggesting model responses, while letting users connect to any database or API resource to curate analytics alongside their evaluations.

ModsysML provides a framework for building relevant test cases across four domains: semantic, LLM-based, human-in-the-loop, and programmatic tests. It is a collection of Python plugins wrapped in an SDK that provides the basic functionality, though it may not fit every use case out of the box. You can craft custom test cases, run evaluations on model responses, curate automated scripts, and generate data frames.
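As a rough, library-free illustration of a programmatic test case, the sketch below scores each input and checks the observed value against an expected one, returning one row per case so the results drop straight into a data frame. This is not ModsysML's API. The `EvalCase` and `run_cases` names are hypothetical, `fake_scorer` is a stand-in returning made-up placeholder scores so the code runs offline, and the meaning of `trend` ("lower" means the observed score must not exceed the expected one, "higher" means it must not fall below it) is an assumption loosely modeled on the `__expected`/`__trend` fields in the ModsysML example later in this post.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class EvalCase:
    """One programmatic test case (hypothetical structure, not ModsysML's)."""
    item: str        # input text sent to the model or scorer
    metric: str      # name of the score to check, e.g. "TOXICITY"
    expected: float  # reference value for that score
    trend: str       # assumed: "lower" -> observed <= expected, "higher" -> observed >= expected


def run_cases(score: Callable[[str], Dict[str, float]],
              cases: List[EvalCase]) -> List[Dict[str, object]]:
    """Score every case and return one row per case (data-frame friendly)."""
    rows = []
    for case in cases:
        observed = score(case.item)[case.metric]
        if case.trend == "lower":
            passed = observed <= case.expected
        elif case.trend == "higher":
            passed = observed >= case.expected
        else:
            raise ValueError(f"unknown trend: {case.trend!r}")
        rows.append({
            "item": case.item,
            "metric": case.metric,
            "expected": case.expected,
            "observed": observed,
            "passed": passed,
        })
    return rows


# Hypothetical stand-in scorer with fixed placeholder outputs (not real API results).
FAKE_SCORES = {
    "This is hate speech": {"TOXICITY": 0.81},
    "You suck at this game.": {"TOXICITY": 0.62},
}


def fake_scorer(text: str) -> Dict[str, float]:
    return FAKE_SCORES[text]


cases = [
    EvalCase("This is hate speech", "TOXICITY", 0.78, "lower"),
    EvalCase("You suck at this game.", "TOXICITY", 0.50, "higher"),
]

for row in run_cases(fake_scorer, cases):
    print(row)
```

Passing the scorer in as a function keeps the check itself independent of any one provider; swapping `fake_scorer` for a real moderation or LLM call would not change `run_cases`.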

ModsysML relies only on official provider API integrations, psycopg (the PostgreSQL adapter), and a few other modules to provide its building blocks. It is the upstream project for the Apollo interface, which serves as a UI for your development needs.

How does it work?

First, install the modsys package from ModsysML:

```shell
pip install modsys
```

Next, import the module:

```python
from modsys.client import Modsys
```

Finally, evaluate a set of test cases and create the report:

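```python
def evaluate_and_report():
    # Create an SDK client and register the Google Perspective provider.
    sdk = Modsys()
    sdk.use("google_perspective:analyze", google_perspective_api_key="#API-KEY")

    # Each test case pairs an input item with an expected Perspective score
    # (__expected) and a trend (__trend). The result is stored in `results`
    # rather than `eval` to avoid shadowing Python's built-in eval().
    results = sdk.evaluate(
        [
            {
                "item": "This is hate speech",
                "__expected": {"TOXICITY": {"value": "0.78"}},
                "__trend": "lower",
            },
            {
                "item": "You suck at this game.",
                "__expected": {"TOXICITY": {"value": "0.50"}},
                "__trend": "higher",
            },
        ],
        "community_id_can_be_None",  # community ID placeholder; may be None
    )

    # Export a report based on output accuracy.
    return sdk.create_report(
        "provider_name",
        "ai-model",
        "dataset_name",
        "link_to_data_set",
        "summary",
        "./path_to_json_file.json",
    )
```

We’re building this!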

Check out Apollo, an open-source toolkit for integrity engineering that implements the process above and provides a way to extend model management with automation & data integrations.

Highlights & Events

Insights | Surprising cases with LLM from the ActiveFence team

Event | Trust & Safety Research Conference

Event | Marketplace Risk Global Summit

Coffee Hours | TSPA Coffee Hours With Me